13 Benefits of UI/UX Design for Small Businesses

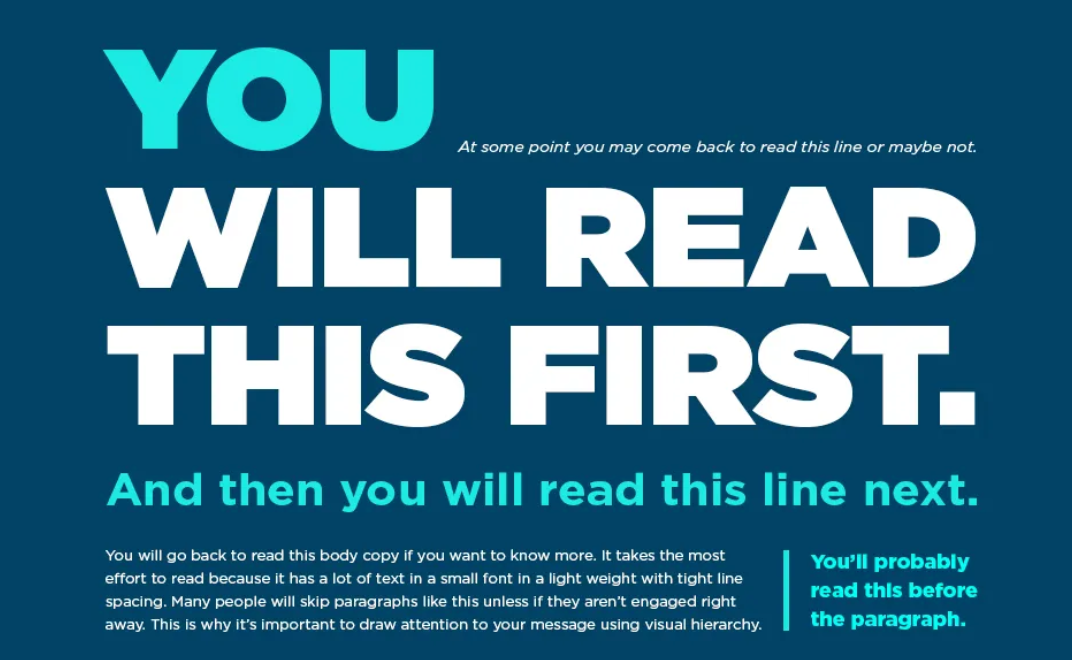

Modern shoppers look for more than just products and information. They want to associate with brands that identify with them and interact with them. For a small business striving to cut through the digital crowd, your best ticket is excellent UI/UX design for your website. This approach will help...