You build a polished, user-friendly AI product—just what stakeholders wanted.

But post-launch, cracks appear. Users get confused, frustrated, and stop trusting the system. Sound familiar?

That’s because most teams focus only on the UI, while the real issues lie deeper. In AI products, success depends on what’s beneath the surface—how your system handles uncertainty, errors, and trust.

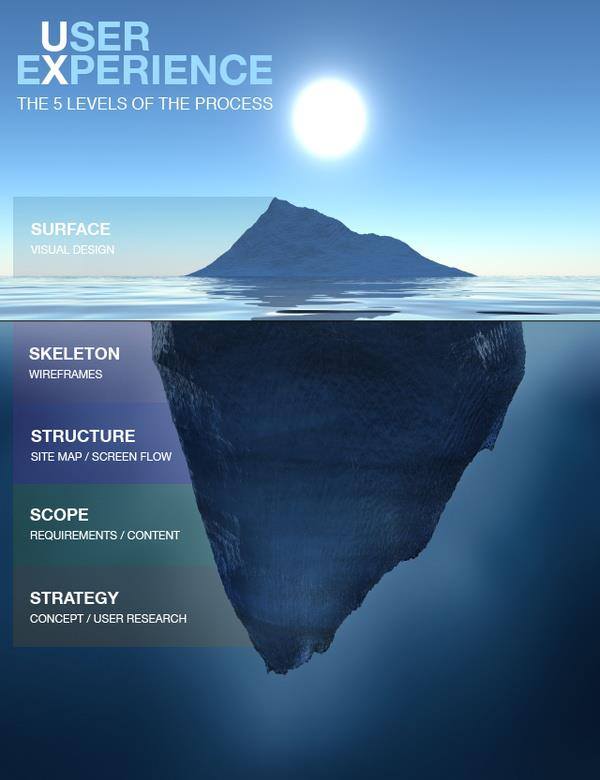

The Iceberg UX model highlights this, showing that 90% of the UX is invisible. After working on 20+ AI products last year, our team at Bricx has seen recurring patterns that build or break trust.

This article breaks down how to design AI-first interfaces and how leveraging the Iceberg UX model can help you create products that feel reliable, adaptive, and usable.

The Emergence of AI-First Systems

Previously, UX was mostly synonymous with UI, the visible layer defining how users interacted with a product. Design teams spent hours crafting pixel-perfect layouts, ensuring everything from color schemes to button placements was just right.

The goal was clear: Make things look good on the surface. However, the emergence of GenAI completely flipped the script.

AI-first tools like ChatGPT, Gemini & Claude now center the experience on smooth, conversational interactions, with the UI becoming minimal or even invisible.

How Does the ‘Iceberg UX Model’ Solve This?

Think about the iceberg analogy, where the UI is just the iceberg’s tip, floating above the surface. But underneath, there’s a massive, hidden structure that constitutes 90% of your user experience.

In the context of AI, this is where everything happens: the logic, failure handling & feedback mechanisms necessary for creating experiences that feel more intuitive and reliable.

If your AI doesn’t explain how it makes decisions, or if it can’t recover gracefully from an error, users will start to question the output’s reliability & usually stop using your product.

Adopting the iceberg UX model helps your product team shift from over-focusing on the aesthetics to actually designing for invisible layers that keep the AI running smoothly in the background.

3 Key Layers of a Great AI User Experience

When designing AI-first products, it’s essential to go beyond just the interface and think about the 3 key layers that shape the user experience.

- The Surface (UI)

The first or ‘surface’ layer usually consists of the visible elements or the UI. This is where most teams start their design process, since it’s the most tangible and easy to approach.

In this layer, teams usually start by building elements like the following:

- Inputs: Text fields, dropdowns, and checkboxes where users provide data.

- Sliders: Controls that adjust parameters or settings within the AI system.

- Tone Switchers: UI components that allow users to change the tone of AI-generated responses.

- Chat Prompts: Input boxes for conversations with AI systems.

The problem here is that while flashy interfaces often look great, they’re also sometimes used to mask the underlying issues with AI behavior. Many teams fall into the trap of overemphasizing the UI, focusing more on aesthetics than critical, behind-the-scenes UX issues.

As per the iceberg UX model, the UI alone isn’t enough for a positive user experience.

Design teams must create interfaces that simplify interactions & set realistic expectations, while also being transparent about AI behavior.

- The ‘Invisible’ Experience

What happens when the AI gives wrong or vague results? Or, even worse, when it hallucinates (i.e., produces an entirely inaccurate response)? If left unaccounted for, these moments can easily break user trust. This is what the second layer is all about.

At our UX agency, we often refer to this as the “invisible experience”: the aspects of a product that users don’t directly interact with but whose impact they feel, especially when something goes wrong.

This is where creating fallback flows & undo patterns helps users recover quickly from errors & continue smoothly. Confidence indicators (such as a “How sure is the AI?” meter) show the reliability of the output.

If the AI is unsure, let users know. Clarifying prompts and model nudges further guide users towards more accurate inputs, reducing confusion & improving trust.

By designing for these invisible layers, you’re ensuring that the AI doesn’t just work when it’s perfect but also handles the imperfections gracefully.
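To make the undo pattern concrete, here is a minimal TypeScript sketch, assuming AI suggestions replace the text of a simple document. `UndoableDocument` and its methods are hypothetical names for illustration, not part of any particular framework. Each AI edit stores the text it replaced, so users can always step back to their own version.

```typescript
// Hypothetical undo history for AI-applied edits: every AI change records
// the text it replaced, so the user can always get back to their own version.
interface AiEdit {
  before: string; // document text before the AI edit
  label: string; // short description for an "Undo: ..." button
}

class UndoableDocument {
  private history: AiEdit[] = [];

  constructor(private text: string) {}

  get current(): string {
    return this.text;
  }

  // Apply an AI suggestion and remember how to reverse it.
  applyAiEdit(newText: string, label: string): void {
    this.history.push({ before: this.text, label });
    this.text = newText;
  }

  // Label of the edit that undo would revert, or null if there is none.
  nextUndoLabel(): string | null {
    const last = this.history[this.history.length - 1];
    return last ? last.label : null;
  }

  // Revert the most recent AI edit; returns false when nothing is left to undo.
  undo(): boolean {
    const last = this.history.pop();
    if (last === undefined) return false;
    this.text = last.before;
    return true;
  }
}

const doc = new UndoableDocument("Draft intro");
doc.applyAiEdit("Polished intro", "Rewrite intro");
console.log(`Undo: ${doc.nextUndoLabel()}`); // Undo: Rewrite intro
doc.undo();
console.log(doc.current); // Draft intro
```

Showing the label on the button (“Undo: Rewrite intro”) tells users exactly what they are reverting, which is a large part of what makes recovery feel safe.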

- System Trust

The third layer in the iceberg UX model focuses on system trust, which is the foundation of any successful AI product. Now, AI is inherently unpredictable, and can produce wrong or unexpected results.

It’s up to designers to ensure the user still feels confident in such moments; otherwise, they’ll abandon the product.

This can be done by giving users just enough information about how the AI works, without drowning them in technicalities. Take Google Assistant, for example.

When it doesn’t understand something, it simply says: “Sorry I didn’t get that. Would you like to try again?” This act of transparency, however small, reassures the user, helping them understand why the AI is struggling.

Adding feedback loops to the mix is also crucial. Asking users questions like “Was this response helpful?” or offering thumbs-up (or thumbs-down) feedback gives your team the signals it needs to improve the AI over time.
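Under the hood, a feedback loop starts with capturing these ratings in a form your team can act on. The sketch below is a hypothetical, in-memory TypeScript example (`FeedbackLog` and its fields are illustrative assumptions); a real product would persist these events and route them to whoever reviews or retrains the model.

```typescript
// Hypothetical in-memory log for "Was this response helpful?" feedback.
type Rating = "up" | "down";

interface FeedbackEvent {
  responseId: string; // which AI response was rated
  rating: Rating;
  comment?: string; // optional free-text reason, e.g. after a thumbs down
}

class FeedbackLog {
  private events: FeedbackEvent[] = [];

  record(event: FeedbackEvent): void {
    this.events.push(event);
  }

  // Share of ratings that were thumbs up (0..1), or null before any feedback.
  helpfulRate(): number | null {
    if (this.events.length === 0) return null;
    const ups = this.events.filter((e) => e.rating === "up").length;
    return ups / this.events.length;
  }

  // Unique IDs of responses that got at least one thumbs down, for review.
  flaggedForReview(): string[] {
    const ids = this.events
      .filter((e) => e.rating === "down")
      .map((e) => e.responseId);
    // Keep only the first occurrence of each ID.
    return ids.filter((id, i) => ids.indexOf(id) === i);
  }
}

const log = new FeedbackLog();
log.record({ responseId: "r1", rating: "up" });
log.record({ responseId: "r2", rating: "down", comment: "Outdated info" });
log.record({ responseId: "r2", rating: "down" });
console.log(log.helpfulRate()); // 1 of 3 ratings positive, about 0.33
console.log(log.flaggedForReview()); // contains only "r2"
```

The optional `comment` field matters: a thumbs down says that something went wrong, while a short reason tells the team what to fix.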

Lastly, predictability also matters. Rather than hiding the AI’s inner workings, make it clear how the AI operates.

Spotify often does this with its personalized playlists, giving users a sense of how those recommendations were made.

When users can predict how the AI behaves, they feel more in control and trust the system more.

Real Mistakes Teams Make With AI UX

Despite the importance of the iceberg UX model, many AI product teams still fall into common traps that lead to poor user experiences.

Here’s a list of the most common ones we’ve noticed:

- Focusing only on UI

Product teams often fall into the trap of over-prioritizing the visual design of their product – thinking that a polished, sleek UI is enough to ensure a positive user experience. While the user interface is critical for first impressions, it’s just the tip of the iceberg in AI-first products.

The real challenge lies in creating the invisible layers defined above, especially the error handling, feedback and failure recovery mechanisms. If these elements are not meticulously planned, things can quickly unravel.

Without these underlying systems, AI products can fail, leading to user frustration and abandonment.

- No Recovery Plan for Failed Outputs

Launching an AI product with a stunning interface is just one part of the equation. What happens when the AI doesn’t work as expected?

Too often, teams neglect to plan for scenarios where the AI gives incorrect or unclear outputs, leaving users confused and frustrated. No recovery plan means users are left with no way to easily recover or proceed, which can cause them to abandon the product entirely.

Whether it’s an error message, a failed recommendation, or an unintended action, it’s crucial to design fallback flows that provide a clear path forward for users, allowing them to continue their interaction or correct the AI’s course.

- Over-relying on developers

A common mistake in AI-first product development is the mentality of “we’ll fix it later.” Some teams assume that the AI will improve post-launch and that they can leave certain things up to the developers after release.

This approach often leads to an ongoing cycle of poor user experiences and low retention rates. Waiting for developers to fine-tune the model or correct failures is a reactive strategy, not a proactive one.

By leveraging the iceberg UX model, you’re accounting for both the visible & invisible layers right at the start. This involves anticipating potential failures and addressing them early in the design process, rather than relying on developers to fix issues later.

Prototyping error handling, designing for edge cases, and creating user flows that guide the AI in less-than-ideal scenarios should be part of the design process from day one, well before any AI model goes live.

- Skipping research or ignoring edge cases

It’s tempting to design for the “happy path”—the ideal scenario where everything runs smoothly, the AI behaves perfectly, and users have no problems. But in the real world, things rarely go as planned.

While creating your AI product, you need to account for edge cases (such as AI misinterpretations, unclear user inputs, hallucinations, or language confusion), since they can be dealbreakers if left unchecked.

When you fail to consider how the system should react to ambiguous inputs or unexpected behavior, you risk frustrating users and losing their trust.

To solve this, you need to understand your users’ “as-is” process (without your product), mapping their journey and analyzing whether your product actually fulfills their requirements.

You can also leverage the iceberg UX model to prototype and test for all such edge cases early on.

By doing this, you’re ensuring that your product holds up in real-world scenarios, not just the ideal ones.

A Practical UX Workflow for AI-First Products

Now, I’ve already talked about the approach and the mistakes to avoid while designing AI products. But what does the ideal UX workflow look like in practice?

Here’s how you can put the iceberg UX model into practice for your AI product:

- Starting with user needs

AI products often end up showcasing model capabilities way before addressing what the users actually need. Now, while it’s tempting to lean into what the AI can do, the key is to first understand how your AI will serve users.

A good example of this would be Socialsonic, the LinkedIn personal branding assistant we worked with a while ago.

Instead of starting with the AI’s features, we began by identifying the pain points users faced with LinkedIn content creation.

We focused on understanding those challenges first, and only then answered how the AI could help users achieve their desired outcomes.

- Mapping out failure states & fallback flows

Next up, you must design for the inevitable failures. AI isn’t perfect, so outline all the potential failure states (e.g., incorrect outputs, misunderstood user queries) and design fallback flows. This ensures users aren’t left stranded when things go wrong.
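One way to make this failure inventory hard to forget is to encode it in code. The TypeScript sketch below models a few hypothetical failure states (the names, messages and actions are illustrative assumptions, not a recommended set) as a discriminated union and maps each one to a fallback that always offers a next step. The `never` check in the `default` branch means that adding a new failure state without designing its fallback fails type-checking.

```typescript
// Hypothetical catalogue of failure states for an AI writing assistant.
type AiFailure =
  | { kind: "lowConfidence"; confidence: number }
  | { kind: "misunderstoodQuery"; query: string }
  | { kind: "emptyOutput" }
  | { kind: "timeout"; afterMs: number };

interface Fallback {
  message: string; // what the user sees
  actions: string[]; // next steps offered instead of a dead end
}

function fallbackFor(failure: AiFailure): Fallback {
  switch (failure.kind) {
    case "lowConfidence":
      return {
        message: `I'm not very sure about this (${Math.round(failure.confidence * 100)}% confident). Want to review it?`,
        actions: ["Show sources", "Regenerate", "Edit manually"],
      };
    case "misunderstoodQuery":
      return {
        message: `I'm not sure what you meant by "${failure.query}". Could you rephrase?`,
        actions: ["Rephrase", "Pick a suggested prompt"],
      };
    case "emptyOutput":
      return {
        message: "I couldn't generate anything for that. Try adding more detail.",
        actions: ["Add detail", "Start from a template"],
      };
    case "timeout":
      return {
        message: `That took longer than ${failure.afterMs / 1000}s. Your input is saved.`,
        actions: ["Retry", "Continue without AI"],
      };
    default: {
      // Exhaustiveness check: a new failure kind without a fallback
      // makes this assignment a compile-time type error.
      const unhandled: never = failure;
      throw new Error(`No fallback for ${JSON.stringify(unhandled)}`);
    }
  }
}

const fb = fallbackFor({ kind: "timeout", afterMs: 8000 });
console.log(fb.message); // That took longer than 8s. Your input is saved.
```

The design choice here is that every branch returns at least one action, so no failure state can leave the user without a way forward.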

A great example of a real-world application following the iceberg UX model is the language learning app Duolingo. When a user gives a wrong answer, the app doesn’t just mark it incorrect; it explains the mistake. By giving users a chance to try again (and also dropping hints), the app guides them back on track instead of leaving them confused.

This fallback flow not only educates but builds trust by showing the system’s understanding of where the user went wrong.

- Testing confidence indicators & trust patterns

When designing AI products, confidence indicators and trust patterns are what will help you address one of the core challenges in this domain: uncertainty.

AI systems are inherently unpredictable, and a user needs to know when the system is uncertain about its response. Testing confidence indicators means experimenting with how much transparency to provide about the AI’s confidence in its output.

For example, if an AI tool like a content generator suggests a topic or piece of content, showing a simple confidence meter (e.g., “Confidence: 70%”) can give users a sense of whether they should trust or question the result.
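A confidence meter like this is mostly a mapping from a model score to wording and visual states. The sketch below assumes the model exposes a score between 0 and 1; `describeConfidence`, the 0.8 and 0.5 thresholds, and the hint copy are hypothetical placeholders meant to be tuned through exactly this kind of testing. Raw model scores are not always well calibrated, so “70%” on the meter may not mean the output is right 70% of the time.

```typescript
// Hypothetical mapping from a model's confidence score (0..1) to what the UI
// shows. Thresholds are illustrative defaults, to be tuned through testing.
type ConfidenceBand = "high" | "medium" | "low";

interface ConfidenceDisplay {
  label: string; // e.g. "Confidence: 70%"
  band: ConfidenceBand; // drives the meter's color or icon
  hint: string | null; // extra guidance for the user, if any
}

function describeConfidence(score: number, highAt = 0.8, lowBelow = 0.5): ConfidenceDisplay {
  // Clamp out-of-range scores so the meter never shows e.g. 120%.
  const clamped = Math.min(1, Math.max(0, score));
  const label = `Confidence: ${Math.round(clamped * 100)}%`;
  if (clamped >= highAt) {
    return { label, band: "high", hint: null };
  }
  if (clamped >= lowBelow) {
    return { label, band: "medium", hint: "Worth a quick review before you use it." };
  }
  return { label, band: "low", hint: "The AI is unsure. Check the facts or try rephrasing your request." };
}

console.log(describeConfidence(0.7).label); // Confidence: 70%
```

Pairing the number with a short hint gives users something to do with the score, rather than just a percentage to interpret.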

As AI becomes more prevalent, users expect transparency, and by testing and refining these indicators, you can create more trustworthy, reliable AI products that users will stick with over time.

- Build layers first, UI later

When designing AI-first products, the app UI should be the last piece of the puzzle. Before focusing on the visual design, solidify the invisible layers that shape the user experience.

These include understanding user needs, mapping out failure states, addressing edge cases, and building trust with the system.

Only once these foundational elements are in place should you move on to designing the UI, which should complement the established system, not just sit on top of it.

This approach ensures a robust and resilient AI product, rather than one built on superficial design.

- Leverage cross-functional collaboration

AI products are inherently complex, requiring input from multiple teams to succeed. UX teams shouldn’t work in isolation; they should collaborate closely with ML, data & product teams.

This is especially crucial when applying the iceberg UX approach, which emphasizes both the visible & invisible layers. Regular cross-functional checkpoints ensure that the AI’s model logic, UX flows and product goals align.

For example, collaborating with the ML team helps UX designers understand the model’s limitations, while working with data scientists allows for fine-tuning outputs based on real-world data.

Continuous collaboration helps adapt the UX in real-time, ensuring that the AI system is not only functional but also meets user expectations.

- Design for uncertainty, not just usability

AI systems are inherently unpredictable, so designing AI-first products means focusing on uncertainty, not just usability. The Iceberg UX Model emphasizes that AI products must be resilient and adaptive, handling failures or unexpected results gracefully.

Instead of assuming perfect AI behavior, designers should plan for fallback flows and confidence indicators to show users how certain the AI is about its output.

Allowing users to modify or retry their inputs helps them regain control when things go wrong. By designing for uncertainty, you ensure the AI remains trustworthy and reliable, even in unpredictable scenarios.

Conclusion

The iceberg UX model isn’t about over-engineering; it’s about designing AI-first products more responsibly. It might seem like adding all these invisible layers overcomplicates things, but the truth is that these elements are essential for delivering value to the end user.

AI systems don’t always work perfectly, and planning for errors, uncertainty, and user trust can be the difference between success and failure. Moreover, it’s not just the final product that benefits from this approach, but also the MVP versions.

When used correctly, the iceberg UX model can help build trust early, reduce post-launch support, and improve retention rates.

- The Iceberg UX Model: Designing for AI-First Interfaces - September 9, 2025
