Image Source: Freepik

Remote usability testing often breaks down long before the first participant clicks anything. When it does, the cause is rarely flawed design or complicated tasks. More often, teams hand participants a poorly organized assortment of links, half-complete instructions, mismatched prototype versions, and test accounts that may or may not work. The entire experience becomes a digital obstacle course: participants hesitate, moderators scramble, and teams walk away with diluted insights.

It is easy to assume these issues are an unavoidable part of remote testing. They are not. They stem from weak asset preparation, something fully within your control. When the materials behind a study are organized with intention, remote sessions shift from chaotic to controlled. When they are not, even strong research plans turn unpredictable.

We’ll explore how better asset collection removes friction, raises data quality, and transforms the remote research experience for everyone involved. The goal is simple: help you run smoother, more confident, more reliable usability studies.

Why Asset Collection Deserves More Attention Than It Gets

Remote usability studies appear straightforward: choose participants, assign tasks, observe behaviors, and collect findings. That process, however, assumes participants receive everything they need in a clean, cohesive package. In reality, the test environment is far more fragmented. Participants join from different devices and browsers, on networks and configurations that researchers cannot see. Without in-person guidance, even minor preparation gaps cast long shadows.

A big part of the friction researchers encounter has nothing to do with the product being tested. It comes from the environment surrounding the test: the variability of devices, networks, and participant setups. This pattern has been repeatedly observed in comparative studies of remote and in-person testing, including an empirical analysis that found environmental inconsistencies to be a primary cause of usability issues in remote sessions. Treating asset collection as a logistical chore rather than a strategic layer of research is what allows this friction to flourish.

Before going deeper, let’s establish a clear foundation.

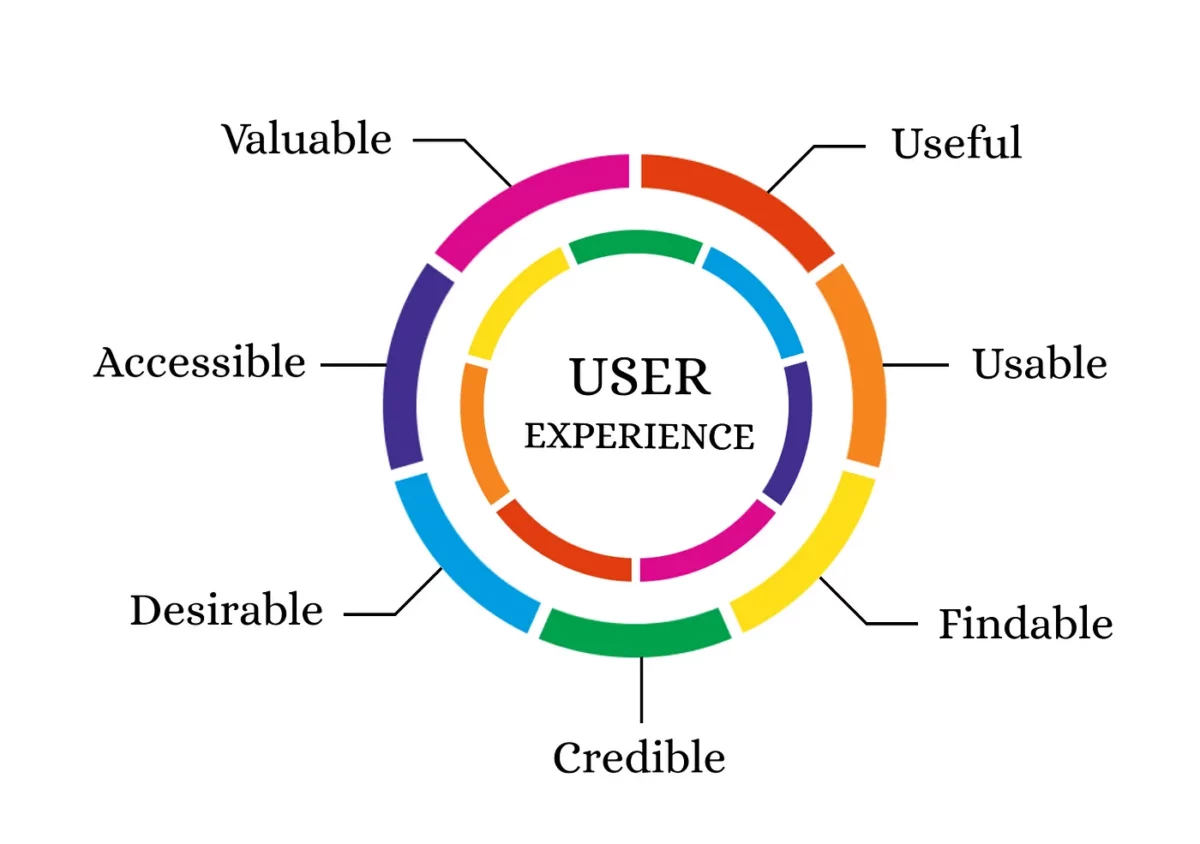

What Usability Studies Actually Aim to Discover

Usability studies examine how real users interact with a product to uncover obstacles, uncertainties, or unmet expectations. They help teams understand why a call-to-action goes unnoticed, why a checkout flow feels too complicated, or why onboarding leaves users confused. These sessions reveal behavioral patterns that analytics alone can't capture. When conducted remotely, they also observe participants in their own natural, distraction-filled environments, which more closely resemble real use.

What Asset Collection Means in This Context

Asset collection is the process of gathering, organizing, and preparing everything a usability testing session needs: prototypes, links, instructions, reference materials, login details, moderator scripts, backup files, and any technical prerequisites. This is the foundation on which the entire research experience is built. Executed meticulously, asset collection strengthens study reliability. Neglected, it creates friction that participants feel immediately.

Where Friction Typically Begins

Several predictable failure points appear across remote studies. Assets stored in inconsistent locations leave everyone unsure which version is correct. Instructions written in overly technical language make participants second-guess simple actions. Prototypes that haven't been tested under real-world conditions frequently cause disruptions. And when research teams prepare each session differently, the resulting inconsistencies distort insights. These issues may start small, but they multiply quickly in remote environments, where teams lack real-time control, clear visibility into work patterns, and accurate performance data to guide decisions.

The Path Toward Frictionless Remote Usability Studies

The good news is that friction is not inevitable. By strengthening asset collection, you can proactively reduce confusion, increase participant confidence, and create a smoother testing workflow. The following strategies have been repeatedly validated by teams running moderated and unmoderated studies across a range of industries. Each emphasizes stability, clarity, and predictability, qualities that remote research depends on.

1. Create a Single Source of Truth Before Recruiting Anyone

The biggest operational bottleneck in remote usability testing comes from fragmented asset storage. When files live across Slack threads, personal drives, email attachments, and outdated documents, version drift becomes unavoidable. Teams waste time asking where the “latest” instructions are, and participants receive materials that feel inconsistent or incomplete. The fastest way to eliminate this recurring friction is to build a centralized asset hub before any recruitment begins.

This hub can live in Notion, Confluence, or a structured shared drive, as long as the format is clear and enforced across the research team. The hub should contain every prototype link labeled with version numbers, participant instructions, moderator scripts, test credentials, and troubleshooting guidelines. Creating a single source of truth turns the preparation phase into a controlled, repeatable process rather than a scramble for files. Teams that adopt this approach often observe a significant decline in participant setup issues because participants receive organized, consistent materials every time.
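If your hub is a structured shared drive or a Notion or Confluence database, part of the "single source of truth" discipline can be checked mechanically. The Python sketch below is purely illustrative: the `Asset` record, the category names, and the `audit_hub` function are hypothetical, not features of any of those tools. It flags missing asset categories, assets without a version label, and any asset with zero or several versions marked as current, which is the version drift described above.

```python
from dataclasses import dataclass

# Hypothetical category names, based on the hub contents listed above.
REQUIRED_CATEGORIES = {
    "prototype",
    "participant_instructions",
    "moderator_script",
    "test_credentials",
    "troubleshooting",
}


@dataclass
class Asset:
    name: str              # e.g. "Checkout prototype"
    category: str          # one of REQUIRED_CATEGORIES
    version: str           # explicit label such as "v3"
    location: str          # link or path inside the hub
    current: bool = False  # the version participants should receive


def audit_hub(assets: list[Asset]) -> list[str]:
    """Return a list of problems; an empty list means the hub looks complete."""
    problems = []
    present = {a.category for a in assets}
    # Every required category needs at least one asset.
    for missing in sorted(REQUIRED_CATEGORIES - present):
        problems.append(f"missing category: {missing}")
    # Each named asset needs exactly one version flagged as current;
    # zero or several means moderators may hand out different versions.
    for name in sorted({a.name for a in assets}):
        current = [a for a in assets if a.name == name and a.current]
        if len(current) != 1:
            problems.append(f"{name}: expected 1 current version, found {len(current)}")
    # Unlabeled assets make version drift impossible to spot.
    for a in assets:
        if not a.version.strip():
            problems.append(f"{a.name}: missing version label")
    return problems


if __name__ == "__main__":
    hub = [
        Asset("Checkout prototype", "prototype", "v2", "https://example.com/proto-v2", current=True),
        Asset("Checkout prototype", "prototype", "v1", "https://example.com/proto-v1"),
        Asset("Task sheet", "participant_instructions", "v2", "hub/tasks-v2", current=True),
    ]
    for problem in audit_hub(hub):
        print(problem)
```

Running a check like this against an export of the hub before recruitment starts gives the team one concrete list of gaps to close, rather than discovering them mid-session.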

2. Write Participant Instructions That Feel Simple, Direct, and Unambiguous

Participants do not struggle because the tasks are inherently complex. They struggle because instructions leave too much room for interpretation. Long or technical phrasing increases hesitation and creates artificial difficulty that distorts research results. Clear, minimal instructions promote confidence and encourage participants to dive into the task rather than second-guess what the research team expects.

Studies consistently show that shorter, more direct task prompts lead to faster task initiation and fewer clarifying questions. Write instructions only after the final prototype version is locked, so the two cannot drift apart, and use plain language with a single action per sentence whenever possible. This matters even more for multi-step flows, such as applying to accelerated nursing programs, where participants can get lost before the task even starts. A brief warm-up exercise confirms that participants can access the environment before formal testing begins, preventing early disruptions.

Below are the practical techniques for crafting friction-free instructions:

- Use direct, assertive phrasing that tells participants exactly what action to take.

- Focus each task on a single verb to remove ambiguity.

- Write task instructions only after confirming the prototype’s final structure.

- Include a short warm-up task to confirm access and reduce early stress.

- Add minimal visual cues, such as small screenshots, when a step involves navigating a new area.

These practices reduce hesitation and create a participant experience that feels smooth and intentional.

3. Standardize Session Preparation So Every Moderator Works From the Same Foundation

One of the silent causes of friction is inconsistent preparation among moderators. Even when using the same prototype, teams sometimes vary in how they set up each session. One moderator may test the prototype links in advance, while another relies on assumptions. One may clear their browser state, while another keeps prior login information stored. These inconsistencies can influence participant performance and create discrepancies in collected data.

A standardized preparation checklist ensures that each moderator begins with the same baseline. The goal is stability, not only for the participant experience but for the quality of insights. With uniform preparation, unexpected variations shrink, and patterns in user behavior become easier to interpret. Here are the high-value elements to include in a preparation checklist:

- Verify that participants have active permissions for all linked prototypes.

- Test user flows in a clean browser environment using test accounts.

- Reset browser state to prevent autofill or cookie interference.

- Upload the moderator script and task guide to the central hub.

- Store a backup prototype link in case technical issues arise.

Each item adds a layer of control that strengthens study reliability.
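A checklist works best when every moderator's progress is visible in one place. As a minimal sketch, assuming each moderator records completed items in the hub, the hypothetical Python below encodes the five checklist items as keys and reports what each moderator still has outstanding before their session. The key names and functions are illustrative, not part of any research tool.

```python
# Hypothetical keys for the preparation checklist above.
PREP_CHECKLIST = (
    "permissions_verified",        # participants can open every linked prototype
    "flows_tested_clean_browser",  # flows run end to end with test accounts
    "browser_state_reset",         # no autofill or cookies carried over
    "script_uploaded_to_hub",      # moderator script and task guide in the hub
    "backup_link_stored",          # fallback prototype link ready
)


def outstanding(completed: set[str]) -> list[str]:
    """Items not yet ticked off, in checklist order."""
    return [item for item in PREP_CHECKLIST if item not in completed]


def readiness_report(moderators: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each moderator to their outstanding items; an empty list means ready."""
    return {name: outstanding(done) for name, done in moderators.items()}


if __name__ == "__main__":
    report = readiness_report({
        "Ana": set(PREP_CHECKLIST),
        "Ben": {"permissions_verified", "script_uploaded_to_hub"},
    })
    for name, items in report.items():
        print(name, "ready" if not items else f"missing: {', '.join(items)}")
```

Keeping the checklist as a single shared definition, rather than a copy in each moderator's notes, is what makes the baseline identical across sessions.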

4. Deliver Everything Participants Need in One Clear, Cohesive Package

A common source of friction appears before the study even begins: participants receive multiple emails, links, and instructions scattered across several platforms. This fragmentation increases the likelihood that participants arrive unsure about what to do or where to begin. A single, consolidated message improves clarity and confidence.

This message should include access instructions, prototype links, backup links, credentials, expectations, and a brief device-readiness step. When all information arrives in one cohesive package, participants are more prepared and significantly less anxious. This preparation also improves session momentum, allowing moderators to spend less time on logistics and more time observing meaningful behavior.
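One way to guarantee that nothing is left out of the participant message is to generate it from a template that refuses to render with empty fields. This Python sketch uses the standard library's `string.Template`; the field names, numbering, and wording are hypothetical placeholders to adapt to your own study.

```python
from string import Template

# Hypothetical message wording; adapt fields and tone to your own study.
MESSAGE = Template(
    "Hi $name,\n\n"
    "Thanks for joining our usability session on $date. "
    "Everything you need is in this message.\n\n"
    "1. Before the session, open $device_check to confirm your browser and connection work.\n"
    "2. Prototype link: $prototype_url\n"
    "   Backup link (if the first one fails): $backup_url\n"
    "3. Test login: username $username, password $password\n"
    "4. What to expect: $expectations\n"
)

REQUIRED_FIELDS = (
    "name", "date", "device_check", "prototype_url",
    "backup_url", "username", "password", "expectations",
)


def build_message(fields: dict[str, str]) -> str:
    """Render the participant message, refusing to produce it with gaps."""
    missing = [key for key in REQUIRED_FIELDS if not fields.get(key, "").strip()]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    return MESSAGE.substitute(fields)
```

Because `build_message` raises an error instead of returning a half-filled message, a missing backup link or credential is caught by the research team rather than by the participant.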

5. Choose Tools That Strengthen Your Workflow Rather Than Complicate It

Technology plays a large role in remote research, but tools must be selected with purpose. Asset organization platforms like Notion and Confluence can help create consistent documentation environments. Design tools like Figma let you maintain clear versioning and control access rights.

For teams testing marketing workflows or promotional flows, assets generated through an AI ad maker can also be included early in the asset hub to ensure consistency across creatives used in usability studies.

You can use Maze to validate task flows before real participants interact with them. Moderation and recording tools such as Lookback and UserTesting give you structured ways to distribute materials and capture sessions with minimal disruption.

But teams often overlook an equally important category: tools that streamline the collection of assets from stakeholders before the research begins. If you are testing a workflow-heavy product where document exchange, task clarity, and approval workflows are core to the experience, using a tool like Content Snare can simplify how you gather the inputs required for your prototype. It provides a structured, centralized way to collect materials, files, and instructions from multiple people without chasing them across email threads.

6. Run Internal Pilot Sessions Using Someone Unfamiliar With the Asset Setup

A pilot session offers the fastest path to uncovering hidden friction, especially when the person running through the tasks has not been closely involved in creating the prototype. When someone encounters the instructions, the links, and the environment for the first time, they surface gaps that the core team has grown blind to. Running the pilot under the same conditions as the real study lets you identify confusing phrasing, access issues, or prototype instability before you put the study in front of actual participants.

Pilots reduce surprises and improve the predictability of your remote sessions.

7. Prepare for Device, Browser, and Network Variability

Remote usability studies inherently involve uncertainty. Participants join using different devices, browsers, and security settings. Some use corporate networks with restrictions that interfere with prototypes. Others use older hardware that affects performance. Designing around these variables reduces disruptions that would otherwise derail your study.

Providing alternative access paths, offering troubleshooting guidance, keeping lightweight backups of important assets, and testing prototypes in multiple browsers all contribute to a more resilient study environment. When you anticipate technical variability, your study remains stable even when unexpected issues arise.
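If your screener asks participants which browser and device they will use, you can compare those answers against the combinations you actually tested before sessions start. A minimal Python sketch, assuming the screener data is available as a simple mapping; the function name and data shapes are hypothetical:

```python
def untested_setups(
    participants: dict[str, tuple[str, str]],
    tested: set[tuple[str, str]],
) -> dict[str, tuple[str, str]]:
    """Return participants whose (browser, device) pair was never tested.

    participants: hypothetical screener data, participant id -> (browser, device).
    tested: (browser, device) pairs the team has run the prototype on.
    Comparison is case-insensitive so "Chrome" and "chrome" match.
    """
    covered = {(browser.lower(), device.lower()) for browser, device in tested}
    return {
        pid: (browser, device)
        for pid, (browser, device) in participants.items()
        if (browser.lower(), device.lower()) not in covered
    }


if __name__ == "__main__":
    screener = {
        "P01": ("Chrome", "desktop"),
        "P02": ("Safari", "mobile"),
        "P03": ("Firefox", "Desktop"),
    }
    tested = {("chrome", "desktop"), ("firefox", "desktop")}
    for pid, setup in untested_setups(screener, tested).items():
        print(f"{pid}: test {setup[0]} on {setup[1]} before the session")
```

Any participant it returns is a candidate for a quick pre-session run on that browser and device, or for one of the alternative access paths.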

The Core Insight: Better Asset Collection Leads to Clearer, Stronger Results

Remote usability studies often falter because the surrounding materials are not designed with the same intentionality as the product being tested. Asset collection is not a secondary task. It is a structural layer of research that determines participant confidence, session stability, and the clarity of insights.

When assets are organized, consistent, and thoughtfully presented, participants move through tasks with ease, and moderators guide sessions with confidence. When the asset layer is weak, sessions feel disjointed, and insights lose their sharpness. Improving asset collection strengthens every stage of the research process.

Final Recap

Here are the essential principles to remember as you redesign your approach to remote usability testing:

- Clear and organized asset preparation will remove sources of confusion that often overshadow the tasks themselves.

- A single asset hub brings order to materials that otherwise scatter across tools and teams.

- Direct and concise participant instructions will significantly reduce hesitation and keep sessions flowing naturally.

- Standardized preparation across moderators produces more reliable session outcomes.

- Thoughtfully selected tools will help you reinforce strong processes rather than compensate for disorganized ones.

- Internal pilots reveal hidden friction before real participants encounter it.

Remember, remote research becomes smoother, more predictable, and more insightful when asset collection is taken seriously.

- How to Reduce Friction in Remote Usability Studies Through Better Asset Collection - January 5, 2026
