Why founders are ditching the “build it and they will come” mindset for rapid research cycles that get answers

Traditional UX research has a startup problem: it takes too long and costs too much. When you’re racing toward product-market fit with limited runway, waiting 3-6 weeks for user insights often means building first and validating later – a recipe for expensive mistakes.

What if you could get the insights that matter in just 48 hours? What if you could validate your most critical assumptions fast enough to change direction before you’ve committed significant development resources?

At Lumi Studio, we’ve helped dozens of startups validate critical assumptions and reach product-market fit faster through rapid research cycles. Here’s the framework we use to compress weeks of traditional research into 48 hours of actionable insights. ⬇️

Why the 48-hour strategy is genius

Think about the last time you spent weeks analyzing a decision.

Did all that extra thinking time actually lead to a better choice, or did you just end up more confused and second-guessing yourself?

Research from cognitive psychology and behavioral economics suggests the latter. Here’s what happens to our brains during extended decision-making periods:

Decision fatigue: Our decision-making quality declines as we make more choices and our cognitive abilities get worn out. Extended decision-making periods lead to progressively worse choices.

What this means for your startup: Every day analyzing research adds more decisions. Should we test this variation? What about that edge case? How many more users? By day three, your team isn’t making better decisions – you’re deciding while mentally exhausted.

→ The deadline forces focus on decisions that matter while your cognitive resources are fresh. You capture collaborative energy before research fatigue sets in, and insights stay connected to current priorities instead of becoming outdated by the time you act on them.

You’ll find yourself asking better questions:

- “What’s the one thing we most need to know?”

- “Which user behavior would most surprise us?”

- “What assumption, if wrong, changes everything?”

How to ask the right questions in UX research sprints

Research time is precious. The questions you choose to answer in your 48-hour sprint should be the ones that most directly influence your next strategic decisions.

The key is understanding which assumptions pose existential risks versus those that simply limit your growth potential. ⬇️

Priority 1: Business-critical assumptions

These assumptions sit at the foundation of your entire business model. If any of these prove false, you’re not optimizing a working product – you’re polishing something fundamentally broken.

“Do users actually experience the problem we think we’re solving?” Whether your specific target users feel this pain point acutely enough to care about a solution.

“Is our approach compelling enough to change their current behavior?” People don’t abandon established habits easily. Your solution needs to be significantly better than their status quo.

“Can real users complete our core workflow successfully?” Your team designed the experience, so of course it makes sense to you. The question is whether people who didn’t build it can navigate it intuitively.

“Will users pay for the value we provide?” This tests whether your value proposition translates into willingness to exchange money for your solution – the ultimate validation of product-market fit.

If you can only test four assumptions in your entire sprint, test these four. Everything else can wait until you know your business model actually works.

Priority 2: Growth-limiting factors

Once you’ve validated basic viability, these assumptions determine how efficiently you can scale.

“Do users understand our advantage over existing solutions?” If users view you as interchangeable with competitors, you’ll compete solely on price or convenience.

“Can new users achieve early success without hand-holding?” Your onboarding process needs to create quick wins that build confidence. Users who struggle initially rarely become long-term customers.

“Do satisfied users naturally recommend us to others?” Word-of-mouth growth requires users who are genuinely excited about sharing their positive experience.

Test these once you’re confident in your Priority 1 assumptions.

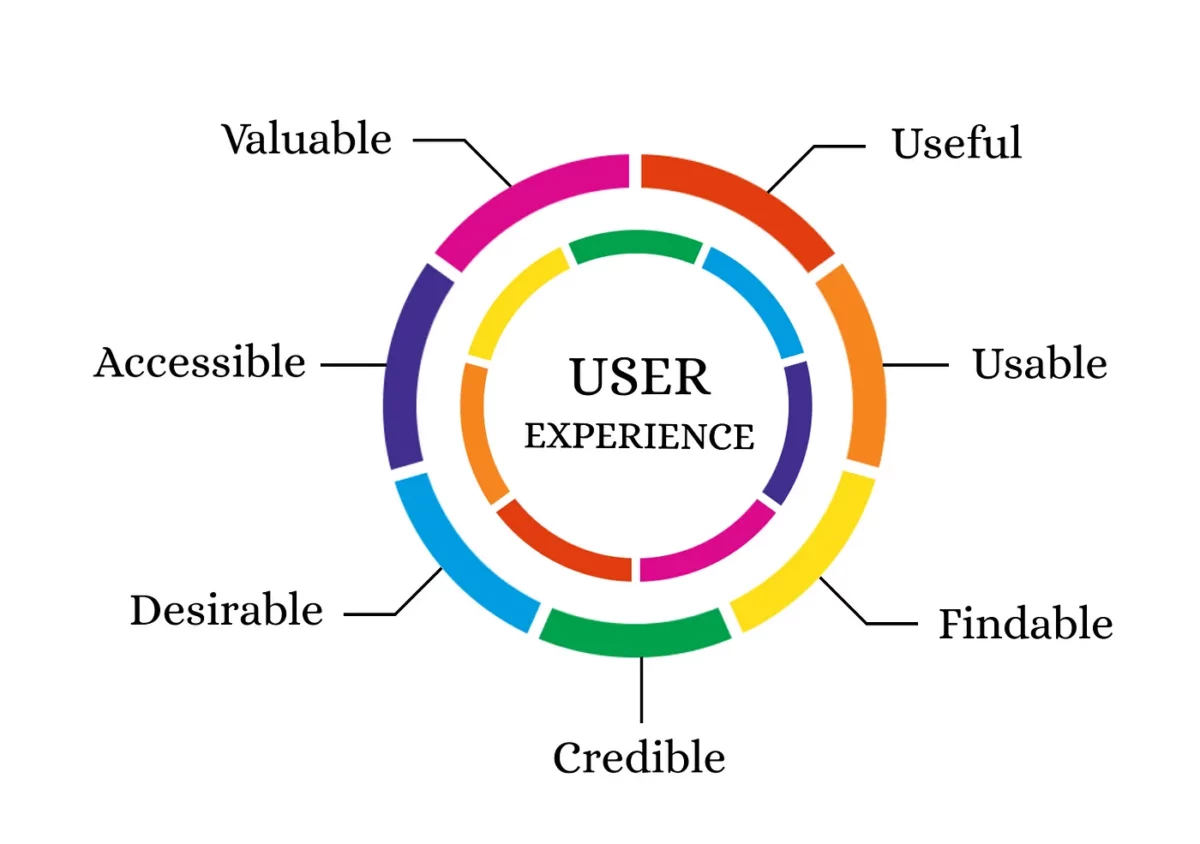

Priority 3: Experience optimization

These assumptions affect user satisfaction and competitive positioning but don’t threaten core business viability. They’re important for long-term success but dangerous to prioritize when survival is uncertain.

- Do users prefer specific interface designs or visual styles?

- Which advanced features would add meaningful value?

- How do users respond to different brand messaging approaches?

How to choose your sprint focus

With limited research time, you need a simple decision framework. Ask yourself: “If this assumption proves wrong, what happens?”

If the answer is “we need to fundamentally rethink our approach” → Priority 1, test immediately

If the answer is “we need to adjust our strategy” → Priority 2, test after survival is confirmed

If the answer is “we need to refine our execution” → Priority 3, test when resources allow
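The three-way rule above can be written down as a small lookup. This is only an illustrative sketch: the consequence labels (`rethink_approach`, `adjust_strategy`, `refine_execution`) and the `triage` helper are hypothetical names invented here, not part of any tool mentioned in this article.

```python
from enum import Enum


class Priority(Enum):
    TEST_IMMEDIATELY = 1            # Priority 1: business-critical
    TEST_AFTER_SURVIVAL = 2         # Priority 2: growth-limiting
    TEST_WHEN_RESOURCES_ALLOW = 3   # Priority 3: experience optimization


# Hypothetical labels for the answer to
# "If this assumption proves wrong, what happens?"
CONSEQUENCE_TO_PRIORITY = {
    "rethink_approach": Priority.TEST_IMMEDIATELY,
    "adjust_strategy": Priority.TEST_AFTER_SURVIVAL,
    "refine_execution": Priority.TEST_WHEN_RESOURCES_ALLOW,
}


def triage(assumptions: dict[str, str]) -> list[tuple[str, Priority]]:
    """Return (assumption, priority) pairs with the riskiest assumptions first."""
    ranked = [
        (text, CONSEQUENCE_TO_PRIORITY[consequence])
        for text, consequence in assumptions.items()
    ]
    # sorted() is stable, so assumptions with equal priority keep their input order.
    return sorted(ranked, key=lambda item: item[1].value)


backlog = {
    "Users prefer the new visual style": "refine_execution",
    "Users will pay for the value we provide": "rethink_approach",
    "Users understand our advantage": "adjust_strategy",
}
for text, priority in triage(backlog):
    print(priority.name, "-", text)
```

Whatever lands at the top of the list is the candidate for your sprint focus.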

Users don’t care if you have the perfect button color if your core product doesn’t solve their problem. Fix what prevents success before optimizing what enhances it.

A step-by-step UX sprint implementation guide

Running your first 48-hour research sprint can feel overwhelming, especially when you’re used to research projects that sprawl across weeks or months.

But the compressed timeline is actually what makes these sprints so effective. Here’s exactly how to execute one. ⬇️

Why we choose Loop11 for our research sprints

After testing multiple research platforms while building products at Lumi, we discovered that most tools are designed for a different reality – teams with dedicated researchers, patient stakeholders, and generous timelines. Startup research needs something fundamentally different.

So why is Loop11 our favorite tool for 48-hour sprints?

Because it lets us implement fast without code

When you’re iterating on your product weekly, the last thing you want is research infrastructure becoming a bottleneck.

We’ve watched too many product teams lose momentum because their research tool required developer time to set up tests, integrate tracking, or modify code for each study.

How this changes your sprint planning: Instead of spending day one on technical setup, you can launch tests within minutes of identifying your research questions.

This speed transforms how you think about research timing. Instead of research being a separate project with its own timeline, it becomes a natural extension of your product development process, something you can spin up instantly when questions arise.

Because the AI analysis saves us days, not hours

The traditional research bottleneck isn’t data collection. It’s analysis.

Spend Monday conducting brilliant user sessions, then lose Tuesday and Wednesday manually transcribing recordings and hunting for patterns. By Thursday, when you finally present insights, your team has already moved on to new priorities.

Loop11’s AI identifies patterns across sessions, flags emotional peaks and confusion moments, and surfaces insights that manual analysis might miss.

Because the research can scale with startup needs

A lot of UX research methods force impossible tradeoffs. Interview 8 people for deep insights but wonder if their experiences represent broader patterns. Survey 100 people for statistical confidence but miss the emotional context behind their responses.

We start sprints with 5-6 moderated sessions to understand the emotional and contextual factors behind user behavior.

→ Why do people abandon our onboarding at step 3? What are they thinking when they hesitate before clicking that button? These conversations give us the “why” behind user actions.

Then we scale those insights with unmoderated testing across 50+ users to validate patterns and measure the magnitude of issues we discovered.

→ Did that confusion we saw in interviews affect most users, or just a vocal few? How many people actually complete the flow successfully?

This hybrid approach gives you both nuanced understanding and statistical confidence within the same 48-hour window. You’re not guessing whether insights from interviews represent broader user behavior. You’re validating them at scale before your sprint ends.

How to set up a 48-hour UX sprint

Before you talk to a single user, you need clarity on what you’re actually trying to learn and who needs to hear the answer.

Identify your single critical assumption

Start with this question: “What’s the one thing that, if we’re wrong about it, means we need to fundamentally rethink our approach?”

Time constraints force focus. When you try to test multiple assumptions simultaneously, you end up with surface-level insights about everything and deep understanding of nothing.

Examples of critical assumptions:

- “Users understand what problem we’re solving”

- “Our solution is worth changing established habits”

- “People will pay for the improvement we provide”

Write your assumption down and post it where everyone can see it. Every research question should connect back to validating or invalidating this core belief.

Assemble your decision-making team

Research insights only matter if someone can act on them. Involve the people who have authority to implement changes based on what you learn.

Core team members:

- Founder or product lead: Can make strategic pivots

- Lead developer: Understands implementation complexity

- 1-2 early customers: Provide reality checks on findings

You need someone who can change strategy (founder), someone who can change implementation (developer), and someone who represents real user perspective (customers).

Set up your research infrastructure

Prepare both moderated and unmoderated research streams before your sprint begins. This parallel approach gives you depth and scale within your compressed timeline.

Moderated setup:

- 5-8 scheduled sessions across both days

- Interview guide focused on your critical assumption

- Screen sharing and recording configured

- Stakeholder observation access arranged

Unmoderated setup:

- Task scenarios that test your assumption behaviorally

- Success metrics defined (completion rates, time on task, etc.)

- Participant screening questions to ensure relevance
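Defining the success metrics up front is easier when you know how you will compute them. Below is a minimal sketch of completion rate and time on task. The `TaskResult` record and its field names are assumptions made for illustration; they do not reflect Loop11’s export format or any other tool’s.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class TaskResult:
    # Hypothetical record shape for one participant's attempt at one task.
    participant_id: str
    completed: bool
    seconds_on_task: float


def summarize(results: list[TaskResult]) -> dict[str, Optional[float]]:
    """Completion rate across all attempts, median time for successful ones."""
    if not results:
        raise ValueError("need at least one task result")
    successful = [r for r in results if r.completed]
    return {
        "completion_rate": len(successful) / len(results),
        # Median is less sensitive than the mean to one very slow participant.
        "median_seconds_when_completed": (
            median(r.seconds_on_task for r in successful) if successful else None
        ),
    }


results = [
    TaskResult("p1", True, 40.0),
    TaskResult("p2", True, 60.0),
    TaskResult("p3", False, 120.0),
    TaskResult("p4", True, 50.0),
]
print(summarize(results))
```

Agreeing on definitions like these before the sprint avoids arguing about what “success” meant once the data is in.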

This dual approach means you’ll understand both what users think (moderated) and what they actually do (unmoderated) about your assumption.

Recruit the right participants

Plan for 15-20 total participants with a 20% cancellation buffer. Quality matters more than quantity, so it’s better to have 6 perfect participants than 12 mediocre ones.

Moderated participants (5-8 people):

- Match your target user profile precisely

- Available for 45-60 minute sessions

- Comfortable thinking aloud during tasks

Unmoderated participants (10-15 people):

- Broader representation of your user base

- Can complete tasks independently

- Willing to provide honest feedback

Start recruiting immediately. Finding people who match your criteria and are available during your sprint window always takes longer than expected.
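The 20% cancellation buffer can be read in more than one way. The sketch below assumes it means inviting 20% more people than you need and rounding up; `invites_needed` is a hypothetical helper, not a feature of any recruiting tool.

```python
def invites_needed(target: int, buffer_pct: int = 20) -> int:
    """Participants to invite so that `target` remain after cancellations.

    Assumes the buffer is applied as target * (1 + buffer_pct / 100), rounded up.
    Integer arithmetic avoids floating-point rounding surprises.
    """
    if target < 0 or buffer_pct < 0:
        raise ValueError("target and buffer_pct must be non-negative")
    # Ceiling division: -(-a // b) == ceil(a / b) for integers.
    return -(-target * (100 + buffer_pct) // 100)


print(invites_needed(8))   # 8 moderated sessions  -> invite 10
print(invites_needed(15))  # 15 unmoderated testers -> invite 18
```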

Day 1: The deep dive

Day 1 focuses on understanding the nuanced reality behind your assumption. You’re collecting data as well as building empathy and context that pure analytics can’t provide.

Morning: Launch and learn

- Start with unmoderated testing to begin data collection immediately. While users complete tasks independently, you’re preparing for more intensive moderated sessions.

- Conduct 3-4 moderated sessions back-to-back with short breaks between each. This intensive format helps you spot patterns quickly while maintaining energy and focus.

Key insight: Don’t treat these as separate research streams. Use emerging patterns from unmoderated data to inform questions in your moderated sessions. If 60% of users are abandoning at step 3, explore that specific moment in your conversations.
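To find the step worth probing, you can tally where unmoderated participants stopped. This is a rough sketch under a stated assumption: each participant is represented by the last step they reached, and reaching the final step counts as completing the flow. The data shape is invented for illustration.

```python
from collections import Counter


def abandonment_by_step(last_step_reached: list[int], total_steps: int) -> dict[int, float]:
    """Share of all participants who stopped at each step before finishing."""
    n = len(last_step_reached)
    if n == 0:
        return {}
    # Participants whose last step equals total_steps finished the flow.
    stopped = Counter(step for step in last_step_reached if step < total_steps)
    return {step: stopped[step] / n for step in sorted(stopped)}


# Five participants in a five-step flow: three stopped at step 3, two finished.
print(abandonment_by_step([3, 3, 3, 5, 5], total_steps=5))  # {3: 0.6}
```

A step that accounts for a large share of drop-offs is a natural place to spend interview time.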

Afternoon: Start recognizing patterns

Continue moderated sessions while actively monitoring unmoderated results. Look for convergence between what people say in interviews and what they do in tasks.

Spend 10 minutes between each moderated session reviewing unmoderated data. If you notice unexpected behavior, probe those moments in upcoming conversations.

Example: If unmoderated data shows users clicking on inactive elements, ask moderated participants what they expected to happen when they clicked those spots.

This iterative approach turns your sprint into an adaptive investigation rather than a rigid data collection exercise.

Evening: Do an initial synthesis

Review AI-generated insights from Loop11’s pattern recognition. The platform identifies themes, emotional peaks, and behavioral patterns that might not be obvious during individual sessions.

Team check-in meeting: What patterns are emerging? What surprises have you discovered? Are you seeing evidence that supports or challenges your assumption?

Don’t aim for final conclusions yet. You’re establishing a shared understanding of what you’re learning before Day 2’s deeper analysis.

Day 2: Clarity mining

Day 2 transforms observations into decisions. The goal isn’t perfect understanding but sufficient insight to improve your product immediately.

Morning: Finish collecting all the data

Finish remaining moderated sessions with focus areas informed by Day 1 patterns. If themes are already clear, use final sessions to explore edge cases or validate preliminary insights.

Allow unmoderated testing to complete and begin comprehensive analysis using Loop11’s AI capabilities. Look for statistical validation of patterns you observed qualitatively.

Afternoon: Final synthesis and strategy

Connect qualitative and quantitative insights. Where do interview themes align with behavioral data? Where do they diverge? The gaps often reveal the most important insights.

Team synthesis session: Focus on three critical questions:

- Did our assumption hold up, or do we need to change direction?

- What user behavior most surprised us?

- What’s the smallest change that would have the biggest impact?

Resist the urge to analyze every interesting detail. Focus on insights that directly relate to your original assumption and can influence immediate product decisions.

Evening: Plan the implementation

Prioritize insights using the impact-effort matrix:

- Fix this week: High impact, low effort (copy changes, simple UI adjustments)

- Fix next sprint: High impact, high effort (workflow redesigns, new features)

- Maybe later: Low impact, low effort (minor improvements)

- Forget it: Low impact, high effort (delete from backlog)
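The four buckets above reduce to two yes/no judgments per insight. A minimal sketch, with a hypothetical `bucket` helper and made-up example findings:

```python
def bucket(high_impact: bool, high_effort: bool) -> str:
    """Map an insight onto the four impact-effort buckets listed above."""
    if high_impact:
        return "Fix next sprint" if high_effort else "Fix this week"
    return "Forget it" if high_effort else "Maybe later"


# Example findings: (description, high_impact, high_effort)
findings = [
    ("Rewrite confusing pricing copy", True, False),
    ("Redesign onboarding workflow", True, True),
    ("Tweak icon spacing", False, False),
    ("Rebuild settings page", False, True),
]
for description, impact, effort in findings:
    print(f"{bucket(impact, effort)}: {description}")
```

Forcing each finding into a binary high/low call keeps the evening session short; nuance can wait for the next sprint.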

Don’t end your sprint with just plans. Implement at least one small improvement based on your findings. This proves the process works and maintains momentum.

Our best UX research sprint tips

After a sprint, research insights tend to die slow deaths in Slack threads and forgotten Google Docs. Teams spend 48 hours gathering user feedback, then watch it fade into irrelevance because nobody knows how to turn observations into action.

The difference between research that changes your product and research that collects dust comes down to how you handle the transition from “we learned this” to “we’re doing something about it.” Here’s how to bridge that gap. ⬇️

Set yourself up for success before you start

The quality of your research outcomes is largely determined before you conduct your first user session.

Get these foundations right, and your sprint insights will be clear and actionable. Get them wrong, and you’ll spend two days generating confusion instead of clarity.

Make your hypothesis crystal clear

Before you recruit a single participant, write down exactly what you’re testing in language simple enough for a 10-year-old to understand.

Instead of: ❌ “We want to understand user sentiment around our value proposition and how it relates to their decision-making process”

Try: ✔️ “We want to know if users understand what problem we solve and whether they care enough to pay for it”

If you can’t explain your research question simply, you probably don’t understand it clearly enough to get useful answers.

Plan for pattern recognition, not individual stories

One user’s experience is an anecdote. Three users having the same experience starts to look like a pattern. Eight users struggling with the same thing is data you can act on.

Plan your participant numbers with pattern recognition in mind. For most startup sprints, that means:

- 5-8 moderated sessions to understand the “why” behind user behavior

- 15-25 unmoderated participants to validate patterns at scale
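The anecdote, pattern, and data rule of thumb can be applied mechanically to tagged session notes. The sketch below treats the numbers in the text (three and eight users) as cut-offs, which is an interpretation rather than a rule stated in this article; the issue tags and the `label_issues` helper are hypothetical.

```python
from collections import defaultdict


def label_issues(observations: list[tuple[str, str]]) -> dict[str, str]:
    """Label each issue by how many distinct participants ran into it.

    observations: (participant_id, issue_tag) pairs; repeated pairs count once.
    """
    participants_by_issue: dict[str, set[str]] = defaultdict(set)
    for participant_id, issue in observations:
        participants_by_issue[issue].add(participant_id)

    def label(count: int) -> str:
        # Assumed cut-offs: 8+ is data to act on, 3-7 a pattern, fewer an anecdote.
        if count >= 8:
            return "data you can act on"
        if count >= 3:
            return "emerging pattern"
        return "anecdote"

    return {issue: label(len(people)) for issue, people in participants_by_issue.items()}


notes = [("p1", "missed-cta"), ("p2", "missed-cta"), ("p3", "missed-cta"), ("p1", "slow-load")]
print(label_issues(notes))
```

Counting distinct participants rather than raw mentions keeps one vocal user from looking like a trend.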

Document your assumptions before they bias your findings

Every team brings hidden assumptions to their research. The founder assumes users care about the same features they do. The developer assumes users think logically about workflows. The designer assumes users notice visual cues.

Write down these assumptions before your sprint begins. Not to eliminate them – that’s impossible – but to watch for them during research. When you catch yourself thinking “users should understand this,” check whether that’s what you’re actually seeing.

Use the two-observer rule

Have two team members independently watch the same user sessions and take notes separately. Then compare what each person noticed.

When you both spot the same pattern, you’ve found something worth acting on. When you see completely different things, you’ve found something worth investigating deeper.
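Comparing two observers’ notes is a set operation once each note is reduced to a short tag. A minimal sketch, assuming both observers use a shared tag vocabulary; the tag names and the `compare_notes` helper are invented for illustration.

```python
def compare_notes(observer_a: set[str], observer_b: set[str]) -> dict[str, set[str]]:
    """Split observations into those both observers saw and those only one saw."""
    return {
        "act_on": observer_a & observer_b,        # both spotted it
        "investigate": observer_a ^ observer_b,   # only one of them spotted it
    }


founder_notes = {"hesitates-at-step-3", "ignores-tooltip", "likes-dashboard"}
developer_notes = {"hesitates-at-step-3", "clicks-inactive-logo"}
print(compare_notes(founder_notes, developer_notes))
```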

Look for the gap between words and actions

Users will tell you they love a feature, then never use it. They’ll say a process is confusing, then complete it successfully. They’ll claim they want more options, then ignore the options you give them.

The most valuable insights often live in these gaps between what users say and what they do. Pay attention to moments when user behavior contradicts their verbal feedback – that’s usually where you’ll find the truth about how your product actually works in practice.

Document in real-time

Research insights are like dreams – vivid in the moment, fuzzy an hour later, and gone by tomorrow. Capture your observations immediately, while the emotional context is still fresh.

Use Loop11’s timestamp features to mark moments when users show confusion, delight, or surprise. Write brief notes about what you’re seeing as it happens, not during breaks between sessions. Your future self will thank you when you’re trying to remember why that one moment felt significant.

The bottom line

Traditional research costs weeks of time plus analysis overhead. Building the wrong feature costs months of development plus opportunity cost. Losing users to poor UX costs customers plus reputation damage.

A 48-hour UX sprint costs two days and delivers insights that can prevent all three scenarios.

In our experience, teams using rapid validation cycles tend to outperform those relying on extended research or gut instinct. They iterate faster, ship more relevant features, and build stronger product-market fit through continuous user feedback loops.

Ready to validate your biggest assumption in 48 hours? Start your Loop11 free trial today and transform guesswork into evidence-based product decisions.
